光线投射#

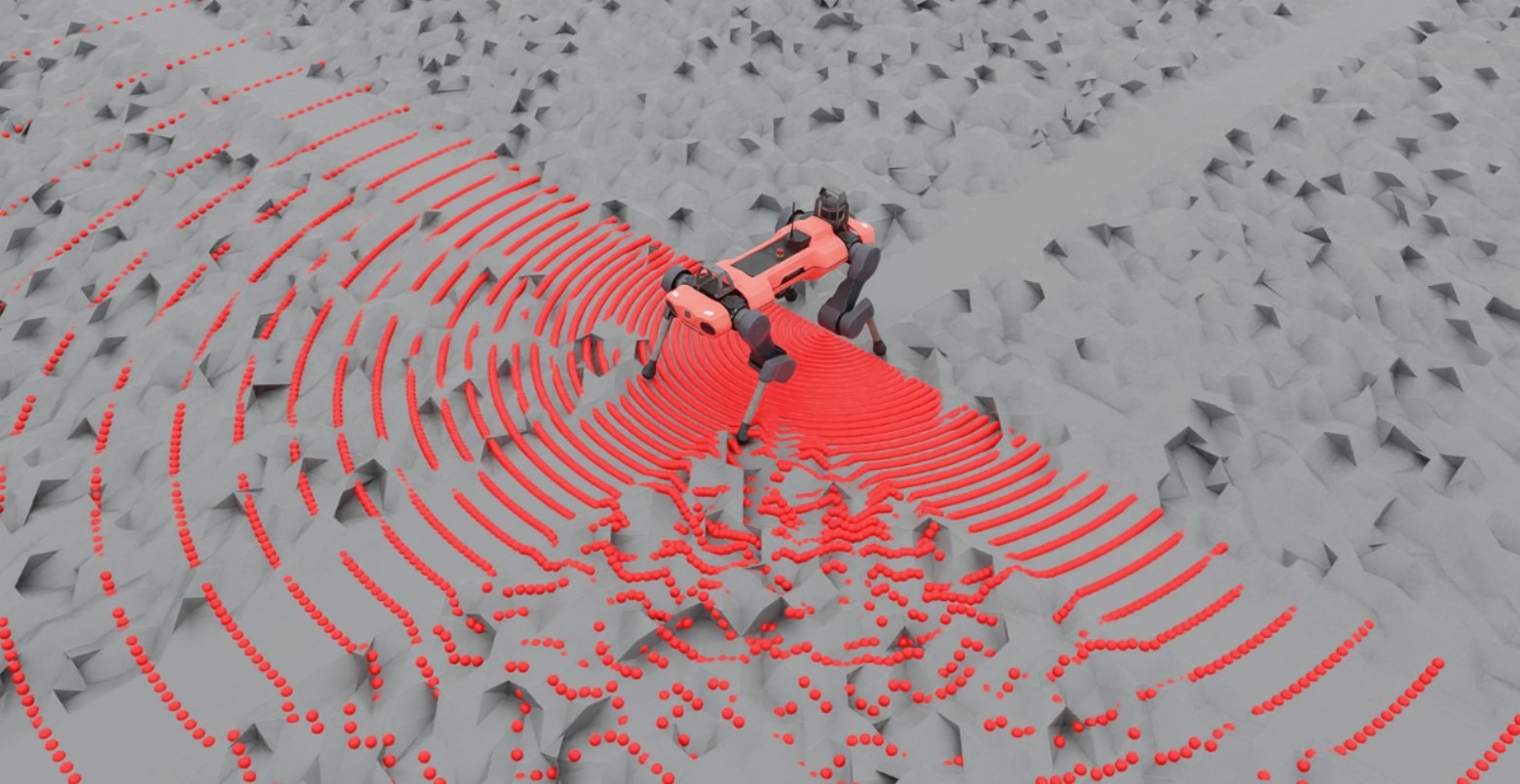

Ray Caster传感器(以及ray caster相机)与基于RTX的渲染类似,二者都涉及到射线投射。不同之处在于,Ray Caster传感器投射的射线严格返回沿投射路径的碰撞信息,并且每条射线的方向可以被指定。它们不会反弹,也不受材质或不透明度等因素的影响。对于传感器指定的每条射线,会沿射线路径追踪一条线,并返回与指定网格的第一次碰撞位置。这是我们的一些四足动物示例中用于测量局部高度场的方法。

为了保持传感器在有多个克隆环境时的性能,线条追踪直接在 Warp 中完成。这就是为什么需要识别特定网格以进行碰撞的原因: 当传感器初始化时,warp 会将这些网格数据加载到设备上。因此,当前版本的传感器仅适用于字面上的静态网格(即 那些没有从其 USD 文件中指定的默认值发生变化的 网格)。这个限制将在未来的版本中移除。

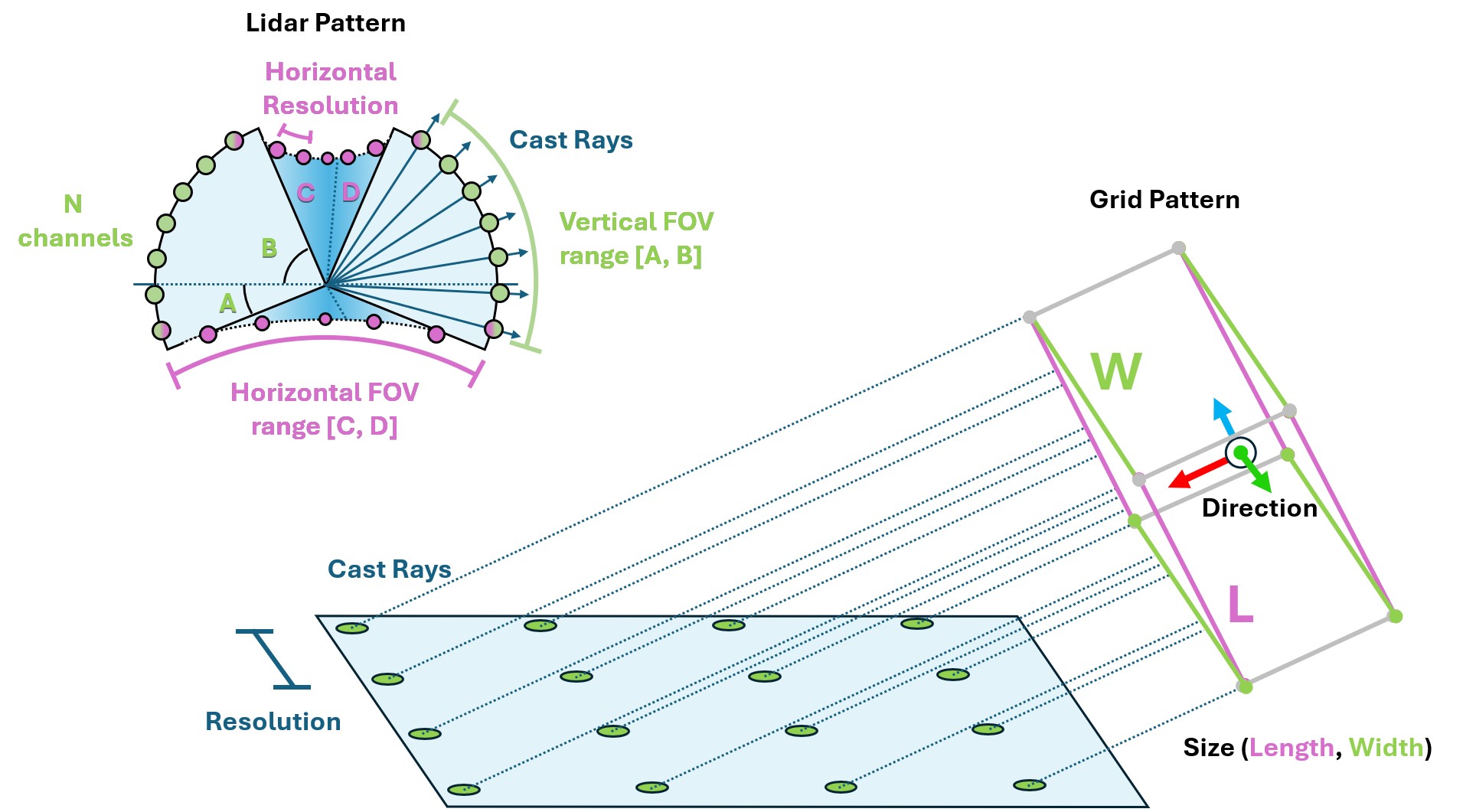

使用光线投射传感器需要附加一个 模式 和一个父级 xform。模式定义了光线如何投射,而 prim 属性定义了传感器的方向和位置(可以指定额外的偏移量以实现更精确的放置)。Isaac Lab 支持多种光线投射模式配置,包括通用的 LIDAR 和网格模式。

@configclass

class RaycasterSensorSceneCfg(InteractiveSceneCfg):

"""Design the scene with sensors on the robot."""

# ground plane

ground = AssetBaseCfg(

prim_path="/World/Ground",

spawn=sim_utils.UsdFileCfg(

usd_path=f"{ISAAC_NUCLEUS_DIR}/Environments/Terrains/rough_plane.usd",

scale=(1, 1, 1),

),

)

# lights

dome_light = AssetBaseCfg(

prim_path="/World/Light", spawn=sim_utils.DomeLightCfg(intensity=3000.0, color=(0.75, 0.75, 0.75))

)

# robot

robot = ANYMAL_C_CFG.replace(prim_path="{ENV_REGEX_NS}/Robot")

ray_caster = RayCasterCfg(

prim_path="{ENV_REGEX_NS}/Robot/base/lidar_cage",

update_period=1 / 60,

offset=RayCasterCfg.OffsetCfg(pos=(0, 0, 0.5)),

mesh_prim_paths=["/World/Ground"],

attach_yaw_only=True,

pattern_cfg=patterns.LidarPatternCfg(

channels=100, vertical_fov_range=[-90, 90], horizontal_fov_range=[-90, 90], horizontal_res=1.0

),

debug_vis=not args_cli.headless,

)

注意,模式配置上的单位是以度为单位!另外,我们在这里启用了可视化功能,以便在渲染中明确显示模式,但这不是必需的,并且在性能调优时应该禁用。

查询传感器的数据可以像其他传感器一样在仿真运行时进行。

def run_simulator(sim: sim_utils.SimulationContext, scene: InteractiveScene):

.

.

.

# Simulate physics

while simulation_app.is_running():

.

.

.

# print information from the sensors

print("-------------------------------")

print(scene["ray_caster"])

print("Ray cast hit results: ", scene["ray_caster"].data.ray_hits_w)

-------------------------------

Ray-caster @ '/World/envs/env_.*/Robot/base/lidar_cage':

view type : <class 'isaacsim.core.prims.xform_prim.XFormPrim'>

update period (s) : 0.016666666666666666

number of meshes : 1

number of sensors : 1

number of rays/sensor: 18000

total number of rays : 18000

Ray cast hit results: tensor([[[-0.3698, 0.0357, 0.0000],

[-0.3698, 0.0357, 0.0000],

[-0.3698, 0.0357, 0.0000],

...,

[ inf, inf, inf],

[ inf, inf, inf],

[ inf, inf, inf]]], device='cuda:0')

-------------------------------

在这里,我们可以看到传感器本身返回的数据。首先请注意,开始和结束处有 3 个闭括号: 这是因为返回的数据是按传感器的数量进行分批的。射线投射模式本身也已经被压平,因此数组的维度是 [N, B, 3] ,其中 N 是传感器的数量,B 是模式中投射的射线数量,3 是投射空间的维度。最后,请注意,这个投射模式中的前几个值是相同的: 这是因为 lidar 模式是球形的,我们已将视场(FOV)指定为半球形,这包括了极点。在这种配置下, “压平模式” 变得显而易见: 前 180 个条目将是相同的,因为它是该半球的底极,并且会有 180 个条目,因为我们的水平视场是 180 度,分辨率为 1 度。

您可以使用此脚本来实验不同的模式配置,并通过修改第 81 行的 triggered 变量来建立对数据存储方式的直觉。

raycaster_sensor.py 的代码

1# Copyright (c) 2022-2025, The Isaac Lab Project Developers.

2# All rights reserved.

3#

4# SPDX-License-Identifier: BSD-3-Clause

5

6import argparse

7import numpy as np

8

9from isaaclab.app import AppLauncher

10

11# add argparse arguments

12parser = argparse.ArgumentParser(description="Tutorial on adding sensors on a robot.")

13parser.add_argument("--num_envs", type=int, default=1, help="Number of environments to spawn.")

14# append AppLauncher cli args

15AppLauncher.add_app_launcher_args(parser)

16# parse the arguments

17args_cli = parser.parse_args()

18

19# launch omniverse app

20app_launcher = AppLauncher(args_cli)

21simulation_app = app_launcher.app

22

23"""Rest everything follows."""

24

25import torch

26

27import isaaclab.sim as sim_utils

28from isaaclab.assets import AssetBaseCfg

29from isaaclab.scene import InteractiveScene, InteractiveSceneCfg

30from isaaclab.sensors.ray_caster import RayCasterCfg, patterns

31from isaaclab.utils import configclass

32from isaaclab.utils.assets import ISAAC_NUCLEUS_DIR

33

34##

35# Pre-defined configs

36##

37from isaaclab_assets.robots.anymal import ANYMAL_C_CFG # isort: skip

38

39

40@configclass

41class RaycasterSensorSceneCfg(InteractiveSceneCfg):

42 """Design the scene with sensors on the robot."""

43

44 # ground plane

45 ground = AssetBaseCfg(

46 prim_path="/World/Ground",

47 spawn=sim_utils.UsdFileCfg(

48 usd_path=f"{ISAAC_NUCLEUS_DIR}/Environments/Terrains/rough_plane.usd",

49 scale=(1, 1, 1),

50 ),

51 )

52

53 # lights

54 dome_light = AssetBaseCfg(

55 prim_path="/World/Light", spawn=sim_utils.DomeLightCfg(intensity=3000.0, color=(0.75, 0.75, 0.75))

56 )

57

58 # robot

59 robot = ANYMAL_C_CFG.replace(prim_path="{ENV_REGEX_NS}/Robot")

60

61 ray_caster = RayCasterCfg(

62 prim_path="{ENV_REGEX_NS}/Robot/base/lidar_cage",

63 update_period=1 / 60,

64 offset=RayCasterCfg.OffsetCfg(pos=(0, 0, 0.5)),

65 mesh_prim_paths=["/World/Ground"],

66 attach_yaw_only=True,

67 pattern_cfg=patterns.LidarPatternCfg(

68 channels=100, vertical_fov_range=[-90, 90], horizontal_fov_range=[-90, 90], horizontal_res=1.0

69 ),

70 debug_vis=not args_cli.headless,

71 )

72

73

74def run_simulator(sim: sim_utils.SimulationContext, scene: InteractiveScene):

75 """Run the simulator."""

76 # Define simulation stepping

77 sim_dt = sim.get_physics_dt()

78 sim_time = 0.0

79 count = 0

80

81 triggered = True

82 countdown = 42

83

84 # Simulate physics

85 while simulation_app.is_running():

86

87 if count % 500 == 0:

88 # reset counter

89 count = 0

90 # reset the scene entities

91 # root state

92 # we offset the root state by the origin since the states are written in simulation world frame

93 # if this is not done, then the robots will be spawned at the (0, 0, 0) of the simulation world

94 root_state = scene["robot"].data.default_root_state.clone()

95 root_state[:, :3] += scene.env_origins

96 scene["robot"].write_root_pose_to_sim(root_state[:, :7])

97 scene["robot"].write_root_velocity_to_sim(root_state[:, 7:])

98 # set joint positions with some noise

99 joint_pos, joint_vel = (

100 scene["robot"].data.default_joint_pos.clone(),

101 scene["robot"].data.default_joint_vel.clone(),

102 )

103 joint_pos += torch.rand_like(joint_pos) * 0.1

104 scene["robot"].write_joint_state_to_sim(joint_pos, joint_vel)

105 # clear internal buffers

106 scene.reset()

107 print("[INFO]: Resetting robot state...")

108 # Apply default actions to the robot

109 # -- generate actions/commands

110 targets = scene["robot"].data.default_joint_pos

111 # -- apply action to the robot

112 scene["robot"].set_joint_position_target(targets)

113 # -- write data to sim

114 scene.write_data_to_sim()

115 # perform step

116 sim.step()

117 # update sim-time

118 sim_time += sim_dt

119 count += 1

120 # update buffers

121 scene.update(sim_dt)

122

123 # print information from the sensors

124 print("-------------------------------")

125 print(scene["ray_caster"])

126 print("Ray cast hit results: ", scene["ray_caster"].data.ray_hits_w)

127

128 if not triggered:

129 if countdown > 0:

130 countdown -= 1

131 continue

132 data = scene["ray_caster"].data.ray_hits_w.cpu().numpy()

133 np.save("cast_data.npy", data)

134 triggered = True

135 else:

136 continue

137

138

139def main():

140 """Main function."""

141

142 # Initialize the simulation context

143 sim_cfg = sim_utils.SimulationCfg(dt=0.005, device=args_cli.device)

144 sim = sim_utils.SimulationContext(sim_cfg)

145 # Set main camera

146 sim.set_camera_view(eye=[3.5, 3.5, 3.5], target=[0.0, 0.0, 0.0])

147 # design scene

148 scene_cfg = RaycasterSensorSceneCfg(num_envs=args_cli.num_envs, env_spacing=2.0)

149 scene = InteractiveScene(scene_cfg)

150 # Play the simulator

151 sim.reset()

152 # Now we are ready!

153 print("[INFO]: Setup complete...")

154 # Run the simulator

155 run_simulator(sim, scene)

156

157

158if __name__ == "__main__":

159 # run the main function

160 main()

161 # close sim app

162 simulation_app.close()