Depth Sensors#

Stereoscopic Depth Cameras#

Single-View Post-Processing Pipeline#

Isaac Sim models stereoscopic depth cameras from a single camera view through the isaacsim.sensors.camera.SingleViewDepthSensor class. This class wraps isaacsim.sensors.camera.Camera and includes APIs for configuring a post-processing pipeline that performs stereoscopic depth estimation from a single Camera prim. The process by which the renderer models disparity and noise from a single camera view is described in detail here.
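For intuition about the quantities involved, the sketch below shows the textbook relation between depth and disparity for an idealized, rectified stereo pair: disparity = focal length (pixels) × baseline / depth. This is not necessarily the exact model the renderer uses; the function names and sample values are illustrative assumptions, not part of the Isaac Sim API.

```python
def depth_to_disparity_px(depth_m, focal_length_px, baseline_mm):
    """Ideal disparity in pixels for a point at depth_m metres."""
    if depth_m <= 0:
        raise ValueError("depth_m must be positive")
    return focal_length_px * (baseline_mm / 1000.0) / depth_m


def disparity_to_depth_m(disparity_px, focal_length_px, baseline_mm):
    """Invert the relation; zero or negative disparity maps to infinity."""
    if disparity_px <= 0:
        return float("inf")
    return focal_length_px * (baseline_mm / 1000.0) / disparity_px


# Illustrative values only, not taken from any real sensor.
for depth in (0.5, 1.0, 2.0, 4.0):
    d = depth_to_disparity_px(depth, focal_length_px=900.0, baseline_mm=50.0)
    print(f"depth {depth:.1f} m -> disparity {d:.2f} px")
```

Nearby objects produce large disparities and distant objects produce small ones, which is why a disparity map appears brighter for closer surfaces.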

Standalone Python#

See the standalone example at standalone_examples/api/isaacsim.sensors.camera/camera_stereoscopic_depth.py for how to use the isaacsim.sensors.camera.SingleViewDepthSensor class and the new Annotators provided in Replicator.

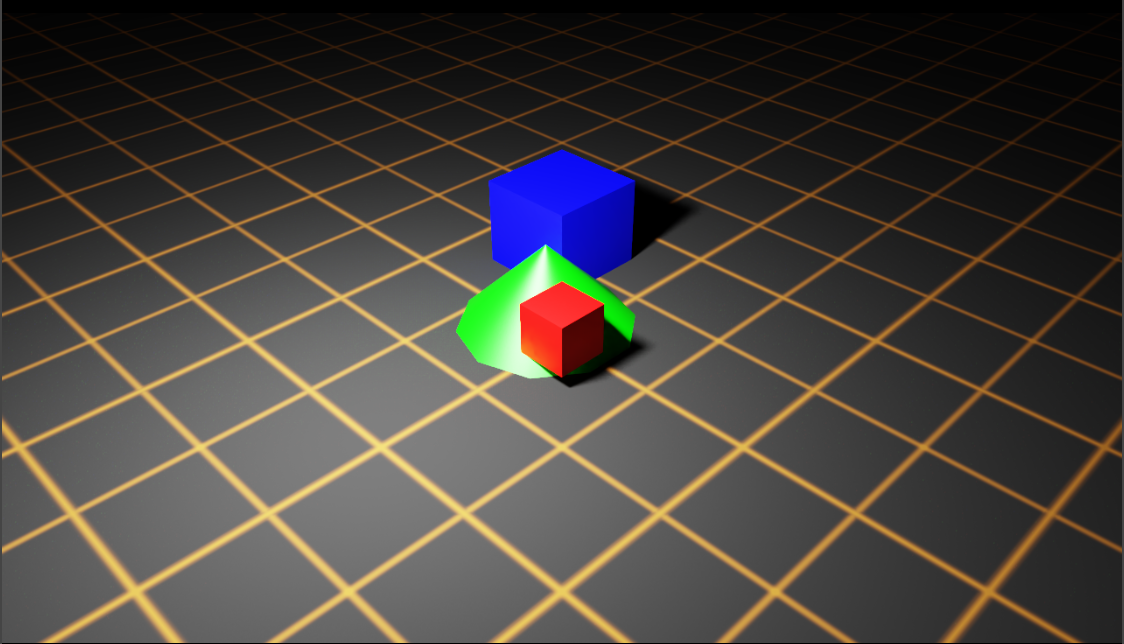

When you run the standalone example, the viewport shows a basic set of colored shapes in the Black Grid environment, like below:

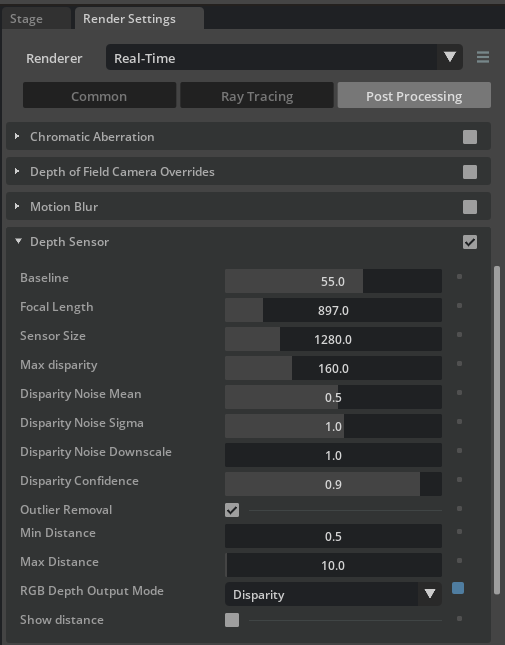

Now, examine the disparity map generated by the depth sensor as follows:

Select the camera render product in the viewport.

Click Render Settings > Post Processing > Depth Sensor to examine the depth sensor post-processing pipeline settings.

Tick the checkbox for Depth Sensor.

Select Disparity from the RGB Depth Output Mode dropdown.

The settings will look like the following:

Note

To learn more about these Post Processing settings, visit Single View Depth Camera documentation.

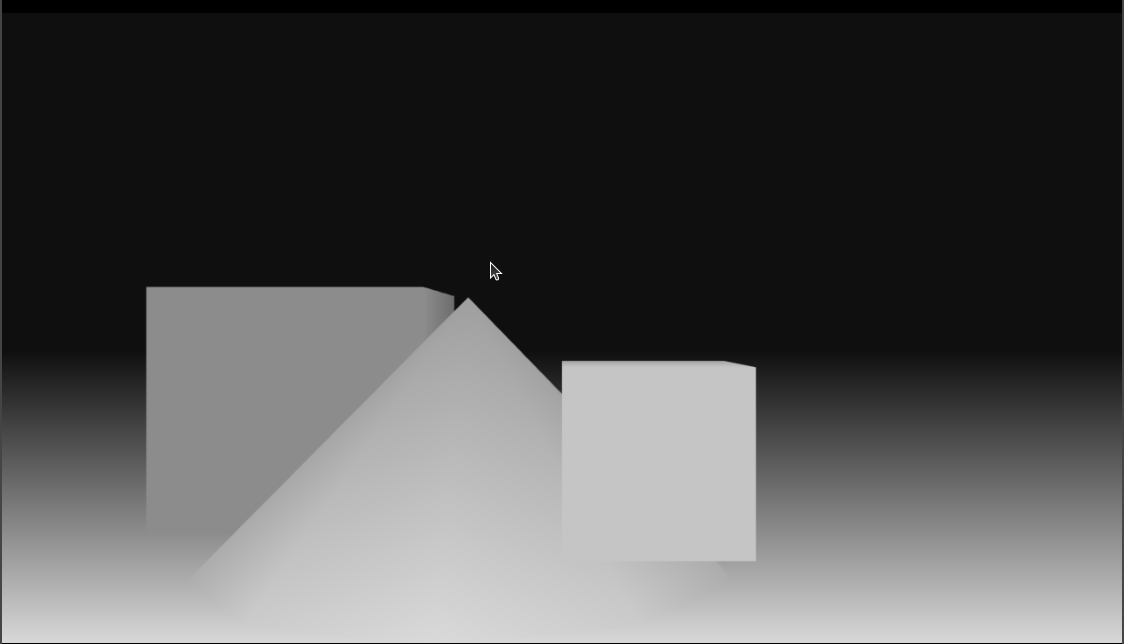

Verify that you see the disparity map in the viewport, like below:

Note

Any settings under Render Settings > Post Processing > Depth Sensor are applied to all render products in the scene (including the viewport). The isaacsim.sensors.camera.SingleViewDepthSensor class enables configuration of individual render products as depth sensors.

Close the Isaac Sim UI and rerun the standalone example as follows:

./python.sh standalone_examples/api/isaacsim.sensors.camera/camera_stereoscopic_depth.py --test

Isaac Sim now runs the standalone example in headless mode and generates the following output from the Annotators attached to the camera render product. The first image is output from the DepthSensorDistance Annotator (depth_sensor_distance.png), and the second image is output from the DistanceToImagePlane Annotator (distance_to_image_plane.png).
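One way to compare the two outputs numerically is a per-pixel error summary. The following is a minimal sketch, assuming both outputs have already been loaded as equally sized nested lists of depth values in metres, and assuming invalid depth-sensor pixels are encoded as non-finite or non-positive values (check the actual encoding before relying on this). The helper name `depth_error_stats` is hypothetical.

```python
import math


def depth_error_stats(sensor_depth, reference_depth):
    """Return (mean_abs_error, valid_fraction) over pixels valid in both images."""
    total_error = 0.0
    valid = 0
    count = 0
    for sensor_row, reference_row in zip(sensor_depth, reference_depth):
        for s, r in zip(sensor_row, reference_row):
            count += 1
            # Skip holes and out-of-range pixels in either image.
            if math.isfinite(s) and math.isfinite(r) and s > 0 and r > 0:
                total_error += abs(s - r)
                valid += 1
    if count == 0:
        raise ValueError("images must not be empty")
    mean_abs_error = total_error / valid if valid else float("nan")
    return mean_abs_error, valid / count


# Tiny made-up images: two valid pixels, two invalid sensor pixels.
sensor = [[1.0, 2.5], [0.0, float("inf")]]
reference = [[1.0, 2.0], [3.0, 4.0]]
mae, valid_fraction = depth_error_stats(sensor, reference)
print(mae, valid_fraction)
```

Reporting the valid fraction alongside the error helps separate depth noise from missing pixels.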

Warning

When using any of the new depth AOVs, you might see the following (or similar) errors:

[Error] [rtx.postprocessing.plugin] DepthSensor: Texture sizes do not match: inColorTexDesc 1920x1080x1:11@0 inDepthTexDesc 1500x843x1:33@0

[Error] [rtx.postprocessing.plugin] DepthSensor: Failed to allocate view resources for view 1 device 0

[Error] [carb.scenerenderer-rtx.plugin] Failed to export AOV 38 to render product. The renderer did not generate the AOV texture

These errors are expected for the first frame of the depth simulation and will be corrected in a future release.
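Because these errors affect only the first frame, a script that consumes annotator output may want to discard initial frames before processing. The sketch below is generic Python, not an Isaac Sim API: `skip_warmup` is a hypothetical helper, and the frame source is a stand-in list.

```python
from itertools import islice


def skip_warmup(frames, warmup_frames=1):
    """Return an iterator over frames with the first warmup_frames discarded."""
    if warmup_frames < 0:
        raise ValueError("warmup_frames must be non-negative")
    return islice(frames, warmup_frames, None)


# Stand-in frame source; in practice each item would be annotator output.
frames = ["frame0 (may be invalid)", "frame1", "frame2"]
usable = list(skip_warmup(frames, warmup_frames=1))
print(usable)
```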

Depth Camera Asset Wrapper#

Isaac Sim supports several official depth sensors. These can be automatically loaded as references on a stage using the isaacsim.sensors.camera.SingleViewDepthSensorAsset class. This API searches the asset for RenderProduct prims specifying single-view depth sensor characteristics, tailored for a specific camera in the asset, then wraps those Camera prims as SingleViewDepthSensor instances. By loading the asset in this manner, you have full control over the post-processing pipeline for each depth sensor in the asset, and can attach any number of Annotators to the SingleViewDepthSensor instances through their API.

Note

Attribute specifications for Camera prims in the official assets linked above are tentative and can change in future asset updates or releases.

Script Editor#

As an example, you can load the Intel RealSense D455 depth camera asset and attach an annotator to the depth sensor by running the following snippet in the Script Editor:

```python
from isaacsim.sensors.camera import SingleViewDepthSensorAsset
from isaacsim.storage.native import get_assets_root_path

# Add Realsense D455 to the stage
asset_path = get_assets_root_path() + "/Isaac/Sensors/Intel/RealSense/rsd455.usd"
realsense_d455 = SingleViewDepthSensorAsset(prim_path="/World/realsense_d455", asset_path=asset_path)

# Initialize all depth sensor prims in the asset, creating render products
# attached to HydraTextures for each.
realsense_d455.initialize()

# Print prim paths for all available depth sensors in the asset
print(realsense_d455.get_all_depth_sensor_paths())

# Get a specific depth sensor by camera prim path
depth_sensor = realsense_d455.get_child_depth_sensor("/World/realsense_d455/RSD455/Camera_Pseudo_Depth")

# Attach an Annotator to the depth sensor
depth_sensor.attach_annotator("DepthSensorDistance")
```

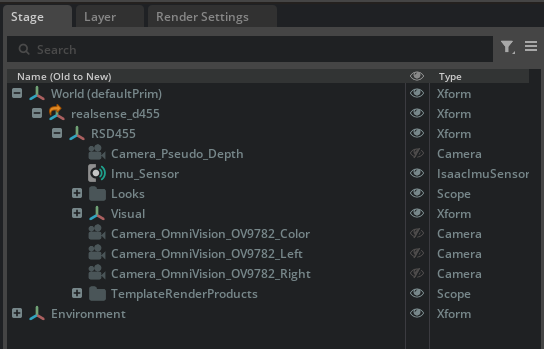

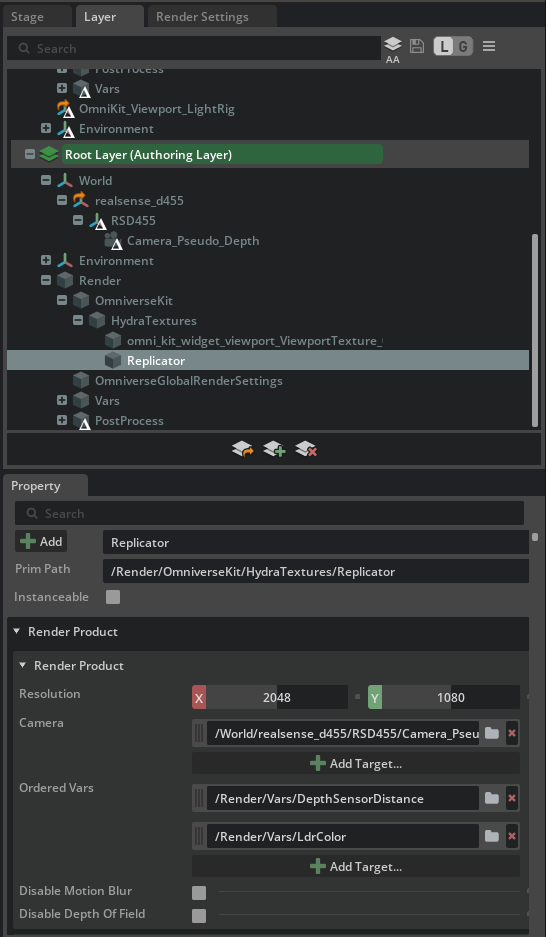

Observe that the Stage window indicates the RealSense D455 depth camera asset has been loaded.

Next, observe that the Layer window indicates the appropriate RenderProduct prim has been created, with a HydraTexture and DepthSensorDistance RenderVar attached:

Building a Depth Sensor Model in Isaac Sim#

Updating Existing Assets to Use Depth Sensors#

Isaac Sim provides a convenient API for updating an existing asset to use depth sensors through the isaacsim.sensors.camera.SingleViewDepthSensorAsset class. The following example demonstrates how to configure a new Camera prim as a depth sensor, then export it as a USD file that can be loaded as a reference in other stages using isaacsim.sensors.camera.SingleViewDepthSensorAsset.

./python.sh standalone_examples/api/isaacsim.sensors.camera/camera_add_depth_sensor.py

Running the example will create a new example_camera_with_depth_sensor.usd asset in the local directory.

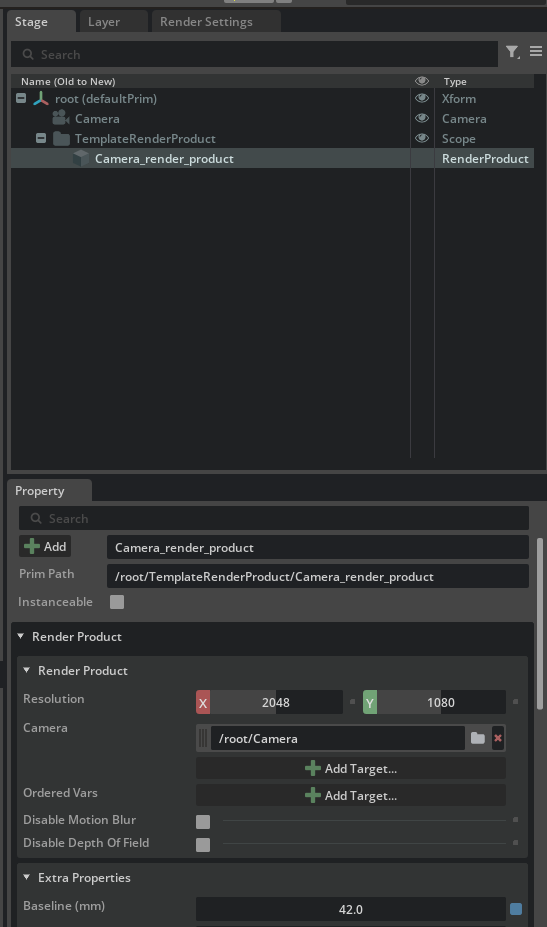

After opening the new asset in Isaac Sim, observe the following in the Stage window:

Observe that the new render product prim has been created and associated with the Camera prim, with the custom value set for the omni:rtx:post:depthSensor:baselineMM attribute.

Open a new stage, and run the following snippet in the Script Editor to load the new asset as a reference:

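```python
from isaacsim.sensors.camera import SingleViewDepthSensorAsset

# Path to the asset exported by the standalone example
asset_path = "example_camera_with_depth_sensor.usd"

# Load the asset as a reference and initialize its depth sensors
example_depth_sensor = SingleViewDepthSensorAsset(prim_path="/example_depth_sensor", asset_path=asset_path)
example_depth_sensor.initialize()
```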

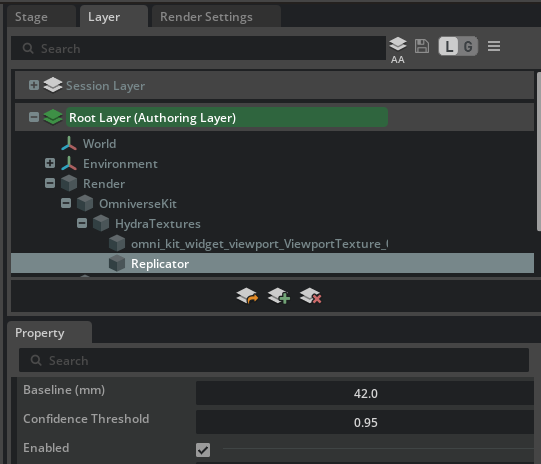

Observe in the Layer window that the new render product has been created, with the custom value set for the omni:rtx:post:depthSensor:baselineMM attribute.
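To get a feel for why the baseline value matters, the sketch below uses the textbook stereo approximation that a disparity error of Δd pixels changes the estimated depth by roughly Z² · Δd / (f · B). It assumes an idealized rectified stereo pair rather than the renderer's actual model; the function name and all numbers are illustrative assumptions.

```python
def depth_step_m(depth_m, focal_length_px, baseline_mm, disparity_step_px=1.0):
    """Approximate depth change caused by a disparity change of disparity_step_px."""
    if focal_length_px <= 0 or baseline_mm <= 0:
        raise ValueError("focal_length_px and baseline_mm must be positive")
    baseline_m = baseline_mm / 1000.0
    return (depth_m ** 2) * disparity_step_px / (focal_length_px * baseline_m)


# Illustrative comparison: the same target and disparity error, two baselines.
narrow = depth_step_m(2.0, focal_length_px=900.0, baseline_mm=50.0, disparity_step_px=0.1)
wide = depth_step_m(2.0, focal_length_px=900.0, baseline_mm=100.0, disparity_step_px=0.1)
print(f"50 mm baseline: {narrow * 1000:.2f} mm, 100 mm baseline: {wide * 1000:.2f} mm")
```

Under this approximation, doubling the baseline halves the depth uncertainty at a given range, and the uncertainty grows with the square of the distance.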

Creating a New Depth Sensor Asset#

As noted earlier, the Single-View Post-Processing Pipeline is intended to model stereoscopic depth cameras specifically, not (e.g.) time-of-flight sensors or structured light sensors. This section links to other sections of the Isaac Sim documentation to describe a general process for building a new stereoscopic depth sensor model, but should not be used as a template for other types of depth sensors.

Use any of the supported Isaac Sim Importers and Exporters to import an existing model of the depth sensor into USD.

Add Camera prims to appropriate locations in the model and save the asset.

Build a test environment in USD, positioning objects and the depth sensor in the environment to accurately model a real-world test rig.

If using OpenCV to calibrate the real-world cameras, apply the OpenCV lens distortion schemas to the Camera prims, as described in Calibration and Camera Lens Distortion Models.

Calibrate camera intrinsics and extrinsics for each Camera prim by comparing rendered images to real-world images and tuning Camera prim attributes.

When the camera intrinsics and extrinsics are calibrated, refer to examples in Standalone Python to script the post-processing pipeline: apply the depth sensor schema to a render product attached to the depth sensor Camera prim, set attributes, render a depth image, and compare the rendered depth image to the real-world depth image. Update depth sensor schema attributes, and repeat the process until the rendered depth image matches the real-world depth image within some acceptable threshold.
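The final tune-and-compare loop can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not an Isaac Sim workflow: `render_fn` stands in for "set the attribute, render, and read back depth", `fake_render` is a hypothetical substitute for the renderer, and depth images are flattened to lists of metres.

```python
import math


def rmse(rendered, real):
    """Root-mean-square error between two equally sized depth lists (metres)."""
    if len(rendered) != len(real) or not real:
        raise ValueError("inputs must be non-empty and the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(rendered, real)) / len(real))


def tune_parameter(render_fn, real_depth, candidates, threshold_m):
    """Evaluate candidates in order; stop early once the error is within threshold_m."""
    best_value, best_error = None, math.inf
    for value in candidates:
        error = rmse(render_fn(value), real_depth)
        if error < best_error:
            best_value, best_error = value, error
        if best_error <= threshold_m:
            break
    return best_value, best_error, best_error <= threshold_m


# Made-up "real-world" measurements and a hypothetical stand-in renderer
# whose output scales with the candidate baseline.
REAL_DEPTH = [1.0, 2.0, 4.0]


def fake_render(baseline_mm):
    return [z * baseline_mm / 50.0 for z in REAL_DEPTH]


best, err, ok = tune_parameter(fake_render, REAL_DEPTH, [40.0, 45.0, 50.0, 55.0], 1e-3)
print(best, err, ok)
```

A simple ordered search is used here for clarity; with several interacting attributes, a coarse-to-fine sweep or an optimizer may be more practical.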